
In the first of 2 blog posts, Department of Internal Affairs senior service designer Valerie Poort discusses the customer usability testing done before the launch of a new online application service for citizenship.

Field notes on usability testing with our customers

I would want to know which translators are accepted by government. I would expect to see a list. I will need to call you to check.

Take a minute to remember the last time you needed to do something online, but you got super frustrated along the way.

That frustration was likely not your fault. More likely, the digital experience wasn’t well designed for you.

At DIA, we’ve recently expanded the New Zealand citizenship application process from just a paper form to include a digital application service. As part of this change, we wanted to make sure the digital application process worked for our customers before we released it.

Over the course of developing our new digital citizenship application service, we observed a small group of customers testing the online application before we launched it for the approximately 35,000 people who apply each year.

This blog post shares what we learned about running customer usability testing successfully, so that our customers have the best possible experience.

Identifying the users of our experience

Doing the identity check online was very convenient versus going in for an interview to prove who you are.

We observed customers using a test online application. The customers we recruited were planning to apply for, or had recently applied for, citizenship by grant.

When we undertake usability testing, we look for a range of participants who represent the different ways people behave when interacting with government online. We describe these ways of behaving as mindsets, and they help guide the design process. You can read more about this in our previous blog post, Using mindsets to guide service design.

During our latest round of testing in December 2019, our oldest participant, at 72, fell into our technology-savvy ‘self-server’ mindset. This shows that assumptions linking a user’s age to their ability don’t necessarily match the behaviours and attitudes we actually observe.

Facilitating the usability testing sessions

It says there is now 1 attempt left. If I fail again, hopefully it resets? I feel alarmed, if I fail again, I might not be able to do this online. Why is it failing me? I don’t know what to do!

The goal of our testing sessions is to understand where people may encounter problems and experience confusion. This allows us to look at what we need to address to make their experience easier when interacting with us.

Our Service Design team has user researchers based in multiple locations around the country (including regional NZ). This makes it easier to reach a more diverse group of people for usability testing, and it enabled us to hold one-on-one, face-to-face sessions with customers nationwide.

We’re testing the digital experience itself, not our customers’ abilities. We tell our customers that the more things they can identify as confusing, the better. Uncovering just 1 customer question during usability testing, and fixing its cause before release, can potentially save thousands of calls to our DIA Contact Centre.

Sometimes we ask our team members (such as UX designers, content writers, business readiness advisors, business analysts, and product owners) to come along to our sessions so they can see the issues for themselves. Seeing this first-hand helps them understand how people are using the new citizenship application process, and why people are having trouble.

Sorting out the different issues we find

Plain and neutral background is vague to me. A brown background can be neutral. Should say white.

We’re close to the second release of the application and are focused on picking up 2 types of priority issues:

- where people would give up and abandon their online application (Critical issue)

- where people are very frustrated and don’t know how to use a certain part of the application, and might need someone to help them through it (Major issue)

There are also 2 other types of issues we look out for:

- where people have a little difficulty or a question that they can easily find the answer to (Moderate issue)

- where people have an opinion or preference that something should be a certain way (Minor issue)

We also look for issues that multiple people share. The more people who experience the same issue, the higher the priority to fix it.
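The triage rule above (rate each issue by severity, then give more weight to issues that several people hit) can be sketched in a few lines of code. This is a minimal, hypothetical Python sketch, not DIA’s actual tooling: the `Observation` class, the numeric severity ranks and the `prioritise` function are illustrative assumptions. It also assumes severity is compared first, with the number of affected participants breaking ties, which the post does not spell out.

```python
from dataclasses import dataclass

# Severity levels from this post. The numeric ranks are an illustrative
# assumption: a lower number means "fix sooner".
SEVERITY_RANK = {"Critical": 0, "Major": 1, "Moderate": 2, "Minor": 3}


@dataclass(frozen=True)
class Observation:
    """One issue seen in one participant's session (hypothetical structure)."""
    participant: str
    page: str
    description: str
    severity: str


def prioritise(observations):
    """Group observations into issues and return them in suggested fix order.

    Each result is a tuple (page, description, severity, people_affected).
    Assumption: severity is compared first; within the same severity, issues
    that more distinct participants ran into come first.
    """
    people = {}    # (page, description) -> set of participants who hit it
    severity = {}  # (page, description) -> most severe level recorded
    for obs in observations:
        key = (obs.page, obs.description)
        people.setdefault(key, set()).add(obs.participant)
        # If the same issue was classified differently across sessions,
        # keep the most severe classification.
        current = severity.get(key)
        if current is None or SEVERITY_RANK[obs.severity] < SEVERITY_RANK[current]:
            severity[key] = obs.severity

    issues = [
        (page, description, severity[(page, description)], len(who))
        for (page, description), who in people.items()
    ]
    # Most severe first; more affected participants first within a severity.
    issues.sort(key=lambda issue: (SEVERITY_RANK[issue[2]], -issue[3]))
    return issues


if __name__ == "__main__":
    # Invented sample data for illustration only; not real test findings.
    sample = [
        Observation("P1", "Identity check", "Unsure what happens after the last attempt", "Critical"),
        Observation("P2", "Identity check", "Unsure what happens after the last attempt", "Critical"),
        Observation("P1", "Photo upload", "'Neutral background' is unclear", "Moderate"),
        Observation("P3", "Family details", "Asked again who the parents are", "Major"),
        Observation("P3", "Photo upload", "'Neutral background' is unclear", "Moderate"),
        Observation("P2", "Declaration", "Prefers a different button label", "Minor"),
    ]
    for page, description, level, count in prioritise(sample):
        print(f"{level:<9} {count} participant(s)  {page}: {description}")
```

Keeping the rule in a single sort key makes it explicit: changing how frequency is weighed against severity only means changing that key.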

Reporting back and fixing issues

This is silly — our whole family is already in the application, I’ve already told you we live together, I’ve already told you they are my children... [Why do I have to tell you again that we’re both the parents?]

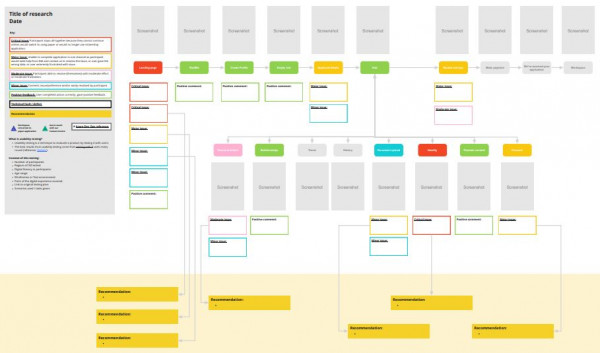

We use a visual map to communicate issues back to the product owner and our multidisciplinary development team. The map is made up of screenshots of the flow of the digital experience we tested. We add major issues to the map, usually leaving out minor issues. Issues are colour-coded to make it easy to spot problem pages in the flow.

The whole team attends a meeting where we discuss the key issues and agree on how we might fix them. We then work with the product owner to decide which issues to fix immediately. These are written up as agile stories and worked on by the development team. Any other issues are catalogued in a backlog for future prioritisation.

Detailed description of figure

The image shows a research template with screenshots of the digital experience laid out in chronological order. The issues found on each screen are listed below its screenshot and colour-coded by severity. The bottom of the template contains an area for recommendations relating to the issues. The left of the template contains a key explaining the purpose of the research and what each issue colour means.

Iterative testing cycles

I’m slightly confused now, seeing this new ‘personal details’ section. I’ve just added my personal details? I’d expect this to be filled out…

Usability testing doesn’t happen just once; it usually happens again and again. Our team conducts at least 1 round of usability testing after each feature is developed and before it is released. We might test something again if issues came up that needed to be addressed. Research shows that the best results come from testing with 5 users and testing often. Read Jakob Nielsen's article on why you only need to test with 5 users.
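Nielsen’s article models the share of usability problems found with n test users as 1 - (1 - L)^n, where L is the proportion of problems a single user uncovers; he reported an average L of about 31% across the projects he studied. The short Python sketch below evaluates that model. The function name is hypothetical, and L = 0.31 is Nielsen’s average rather than a figure measured for this service.

```python
def share_of_problems_found(users: int, detection_rate: float = 0.31) -> float:
    """Expected share of usability problems found with `users` participants.

    Nielsen-Landauer model: 1 - (1 - L) ** n, where L (detection_rate) is the
    chance that one participant runs into a given problem. The 0.31 default
    is the average Nielsen reported; real projects can differ.
    """
    if users < 0 or not 0 <= detection_rate <= 1:
        raise ValueError("users must be >= 0 and detection_rate within [0, 1]")
    return 1 - (1 - detection_rate) ** users


if __name__ == "__main__":
    # Show the diminishing returns of adding more participants to one round.
    for n in (1, 3, 5, 10, 15):
        print(f"{n:>2} users: {share_of_problems_found(n):.0%} of problems found")
```

Under this model, 5 users surface roughly 84% of problems, and each extra participant adds less than the one before. That is the reasoning behind running several small rounds with fixes in between, rather than one large round.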

Our adult citizenship application has gone through 9 rounds of usability testing so far, as different parts and features of the online application have been developed.

When possible, before a digital experience is even built, we also do user research with paper wireframes, draft content, and draft processes. We might do this several times to make sure nothing is missing, and to answer any questions the UX designer, product owner, or content writers have about how something needs to be designed for customers.

Repeatedly talking with people about the service they will end up using helps us release the best digital service we can for our customers and DIA citizenship officers. Our team has now released the online application for citizenship by grant for adults. We’re looking forward to releasing other types of citizenship by grant applications very soon.

We’ve recently published a blog post on the content design challenges for our new online citizenship application.

In a future blog post, I will talk about working with our own DIA people to successfully conduct usability testing of our new internal system.