Digital Service Design Standard – Recommendations for Assessment and Reporting Models

Executive summary

The Digital Service Design Standard (the Standard) was published in mid-2018. The New Zealand Government made a commitment under the Open Government Partnership to publish a preferred assessment model for the Standard by June 2019.

This paper presents recommendations for a framework for assessing and reporting on agency use of the Standard. It also makes recommendations on the Standard itself and on ways to better enable its implementation, use, and support.

Key recommendations include:

- updating the Standard to include clear, specific, measurable outcomes which can be assessed and reported

- establishing consequences for non-compliance

- implementing an assessment model in which the level of assessment and reporting varies with the volume and impact of the services being assessed

- providing resources to raise awareness and support the implementation of the Standard in agencies.

Introduction

In April 2019, Think Tank Consulting Limited was engaged to run workshops and help frame how government agencies might best assess their implementation of the Digital Service Design Standard (the Standard). This work was commissioned by Government Information Services (GIS) at the Department of Internal Affairs (DIA) and performed by Dave Moskovitz.

This report makes recommendations based on feedback from the workshops on how the Standard should be assessed and reported, and how it should evolve.

The New Zealand Government (the Government) is a member of the Open Government Partnership (OGP), an international Non-Government Organisation (NGO) which describes itself on its website as “an organization of reformers inside and outside of government, working to transform how government serves its citizens”. There are currently 79 countries which are members of OGP.

Each government member makes periodic commitments to the OGP. The Government made 12 commitments in the 2018-2020 OGP National Action Plan covering the themes of participation in democracy, public participation to develop policy and services, and transparency and accountability.

Commitment 6, with respect to service design, is:

“To develop an assessment model to support implementation of the all-of-government Digital Service Design Standard (the Standard) by public sector agencies”

| Milestone | Start date | End date |
|---|---|---|
| Identify suitable assessment (conformance) models for supporting agency uptake of the Standard, including options for assessment and measurement of performance against the Standard | August 2018 | March 2019 |
| Publication of preferred assessment model for implementation | April 2019 | June 2019 |
| Public engagement on a refresh and review of the Digital Service Design Standard | December 2019 | June 2020 |

1. Methodology

The work underlying this report was divided into four phases: identifying stakeholders, running workshops with self-selected respondents, surveying the workshop participants, and analysis and reporting.

Identifying stakeholders

Stakeholders were invited to participate through a number of channels, split into two streams: public sector and non-public sector.

Workshops were advertised through:

- A list provided by DIA’s relationship managers

- The Digital.govt.nz Discussion space

- The NZ GovTech Meetup

- The NZ GovTech Slack

- Various social media channels.

Workshops

Public sector workshops were run in May 2019: six sessions at DIA in Wellington, one session in Auckland, and one online video conference session.

Approximately 65 people from the following agencies attended the public sector workshops.

- Ministry of Justice

- Department of Internal Affairs

- Christchurch City Council

- Museum of New Zealand Te Papa Tongarewa

- Statistics New Zealand

- Land Information New Zealand

- New Zealand Transport Agency

A private sector workshop was run at a NZ GovTech Meetup on 14 May, which was attended by 11 people.

The workshops covered an overview of the Standard, and encouraged participants to discuss their own level of awareness of the Standard, how they used it in their agency and/or unit, the value of the Standard, and how they thought it should be reported and assessed. The workshops also elicited feedback on each of the principles in the Standard.

Free-form notes were taken during the workshops; these are summarised in Appendix A.

Surveys

Workshop participants were also asked to fill in a survey. Depersonalised raw data from the survey is available on request.

The survey covered a range of topics from the workshop, including use and awareness of the Standard within agencies, the value of the Standard, and how the Standard should be reported and assessed. The survey results are summarised in Appendix B.

Analysis and reporting – Assessment model

The Purpose, Scope, and Development section of the Digital Service Design Standard website proposes the following discussion models for reporting on and assessing the Standard.

- The Standard is a discretionary resource to inform government agencies when designing services, with a suite of reference guidance supplied.

- The Standard is a discretionary standard supported by a self-reported, self-assessment maturity model, with a suite of reference guidance supplied.

- The Standard is a discretionary standard underpinned by a centrally-reported, self-assessment maturity model, and supported by centralised support resources.

- The Standard has a centralised mandated governance model (e.g. design authority) and supporting conformance structures.

The same web page notes that “these discussion models are not final options; they are primarily a mechanism to stimulate debate and surface potential barriers, concerns and opportunities.”

The above models were discussed at the workshops, and also included in the post-workshop surveys.

2. Findings and recommendations

2.1 The Digital Service Design Standard is highly valued

The workshops and survey revealed a high degree of appreciation for the Standard, and nearly all participants want to see the Standard used more widely. Despite a generally low level of awareness, the workshop participants were grateful to have a chance to discuss the Standard and learn more about it in the workshops.

2.2 In its current form, it’s not a standard

One of the overriding comments coming out of the workshops was that the Standard is a worthy set of principles, but it is not a standard, as it lacks clear, measurable outcomes that must be met to comply.

Recommendation 1: Add specific, measurable, assessable, and reportable outcomes to each principle in the Standard.

It would be difficult to provide an assessment and reporting framework without specific points to assess and report.

The principles as they appear on the digital.govt.nz website include a heading for “what you should be able to demonstrate or describe”, but most of the items under it lack specific measures, or links to enough supporting information, to let someone produce a reliable, repeatable, and comparable assessment or report, whether an agency is assessing itself or the assessment is performed centrally.

2.3 Unless it’s mandatory, it won’t get resourced

Comments from the workshops, as well as data from the subsequent survey, were overwhelmingly in favour of at least some mandatory compliance elements, as participants felt that unless compliance was mandated, it would not be resourced. Furthermore, many workshop participants thought there should be consequences for non-compliance, citing the example of the Web Accessibility Standards, which are mandatory but, because there are no consequences for non-compliance, are not widely implemented.

Recommendation 2: Establish thresholds for mandatory assessment and reporting

The compliance burden should be commensurate with the importance and impact of the service in question. A set of thresholds should be developed determining the level of assessment and reporting required based on a number of factors. As an example, factors might include:

- development cost of the service

- transaction volume of the service

- target audience (e.g. internal vs external, general public vs groups with specialised skill sets, etc.)

- impact of impaired access to the service (e.g. prevents the collection of benefit vs minor inconvenience).

Depending on these factors, an agency might be exempt from assessment and reporting for trivial and inconsequential services, follow a “light” assessment and reporting regime for infrequently used, non-critical services, or follow a full regime for high-volume, critical services.

The light regime could include self-assessment against a maturity model, and might also include random but infrequent audits by a central agency.

The full regime would involve mandatory central assessment and central reporting. The agency providing the central assessment and reporting should be the Department of Internal Affairs (DIA).
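To make the threshold idea more concrete, the following Python sketch shows one possible way the factors listed above could be combined into a decision about which regime applies. It is illustrative only: the `Service` fields, the threshold values (`HIGH_COST_NZD`, `HIGH_VOLUME`, `TRIVIAL_VOLUME`), and the rules combining them are hypothetical assumptions, not values proposed in this report.

```python
from dataclasses import dataclass
from enum import Enum


class Regime(Enum):
    EXEMPT = "no assessment or reporting required"
    LIGHT = "self-assessment against a maturity model, with occasional central audits"
    FULL = "mandatory central assessment and central reporting"


@dataclass
class Service:
    # Hypothetical fields standing in for the factors listed in Recommendation 2.
    development_cost_nzd: float  # development cost of the service
    annual_transactions: int     # transaction volume of the service
    external_facing: bool        # target audience: public (True) vs internal (False)
    critical_impact: bool        # impaired access causes serious harm, e.g. a missed benefit


# Hypothetical thresholds, chosen only to illustrate the idea.
HIGH_COST_NZD = 1_000_000
HIGH_VOLUME = 100_000
TRIVIAL_VOLUME = 1_000


def required_regime(service: Service) -> Regime:
    """Return the assessment and reporting regime for a service (illustrative rules)."""
    high_cost = service.development_cost_nzd >= HIGH_COST_NZD
    high_volume = service.annual_transactions >= HIGH_VOLUME

    # Full regime: critical services that are also expensive or heavily used.
    if service.critical_impact and (high_cost or high_volume):
        return Regime.FULL

    # Exempt: internal, non-critical, low-volume, low-cost services.
    trivial = (
        not service.external_facing
        and not service.critical_impact
        and service.annual_transactions < TRIVIAL_VOLUME
        and not high_cost
    )
    if trivial:
        return Regime.EXEMPT

    # Everything in between falls under the light regime.
    return Regime.LIGHT


if __name__ == "__main__":
    examples = {
        "benefits_portal": Service(5_000_000, 2_000_000, external_facing=True, critical_impact=True),
        "intranet_form": Service(20_000, 300, external_facing=False, critical_impact=False),
        "event_signup": Service(80_000, 5_000, external_facing=True, critical_impact=False),
    }
    for name, svc in examples.items():
        print(f"{name}: {required_regime(svc).name}")
```

Run as a script, this prints FULL for the hypothetical benefits portal, EXEMPT for the intranet form, and LIGHT for the event sign-up. Actual thresholds would need to be agreed with agencies; writing them down as explicit rules like these could help make the criteria transparent and consistently applied.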

Recommendation 3: Phase in mandatory compliance

Once the thresholds have been set, they should be phased in over a period of 2 years, and be in place by 2022. During that period, agencies should self-assess and self-report to build capability in preparation for mandatory compliance. This would also give the central agency time to build its own capability and resources.

Recommendation 4: Establish consequences for non-compliance

There was general agreement in the workshops that consequences for non-compliance sharpen the focus considerably.

Consequences could include:

- increased remedial support

- visibility of non-compliance

- withholding of project funding

- performance management.

Recommendation 5: Require standards compliance in all Requests for Proposals (RFPs)

Vendors providing services that meet the thresholds above should be required to implement the revised Standard. This requirement should be added to the Government Procurement Rules at their next revision.

2.4 The Standard should be easy to learn about, understand, and comply with

Awareness of the Standard within agencies is low, with very few workshop participants saying that their agency was using the Standard, and the majority of the rest saying that they were unsure whether or not their agency was using the Standard.

Many participants also said that they were not aware of any resources available to help them upskill themselves, or support them in providing better services.

Recommendation 6: Run an awareness campaign

With so few agencies either aware of or using the Standard, there is a significant opportunity to increase awareness of it. This will be particularly important if compliance becomes mandatory in the future. Middle and senior managers should be actively targeted in this campaign.

Recommendation 7: Refactor the Standard to make it clearer, adding best practice and case studies, and taking note of specific feedback in Appendix C.

Some of the principles related to openness, reuse, and transparency could be combined. Showing people examples of best practice and case studies would go a long way to help them understand what’s involved beyond theoretical principles.

In the workshops, participants provided specific feedback on the principles, which is included in Appendix C.

Recommendation 8: Provide education and ongoing support

Suggestions from the workshops and survey results included providing a getting started guide to help people take their first steps with the Standard, more documentation, courses, and e-learning. A maturity model would also help agencies decide where best to put their resources.

Central support, including passive support such as more information on the website, and active support such as consulting services, would help agencies improve the quality of the services they provide to meet the Standard.

There should also be an organised community of practice, resourced by DIA, around service design and the Standard. There are a number of existing support groups around government, such as the Open Government Ninjas, the GovtWeb Yammer, the Digital.govt.nz Discussion space, and the Service Design Network, but none is specifically devoted to the discipline of service design in government. Resources should be made available to help the service design community within government become better organised so that its members can share resources and best practice.

3. Conclusion

Awareness of the Digital Service Design Standard is low, but it is highly valued by those who discover it.

This paper recommends that the Standard undergo a minor revision, supplemented with case studies and specific, measurable outcomes, to turn it from a set of principles with some guidelines into a true standard.

Once these specific, measurable outcomes are in place, agencies should go through a 2-year period of self-assessment and self-reporting, building up to mandatory threshold-based assessment and reporting, to be performed centrally for services that meet the threshold.

Vendors delivering services that meet the thresholds should be required to adhere to the Standard.

Significant resources should be put into raising awareness of the Standard, providing education and skills development for service designers, and providing ongoing support both centrally and through the service design community.

Appendix A: Analysis of interactions from the workshops

1. Low awareness but high appreciation of the Standard

Although many workshop participants were aware of some of the principles in the Standard, a large majority had not read the entire Standard. There were many comments at the workshops about how important the Standard is, and how it should be applied more widely. These comments are backed up by the survey data.

2. It’s not a standard, it’s a set of principles

Many participants appreciate the Standard, but a common theme of feedback in the workshops was that it isn’t really a standard. A standard would have specific, measurable outcomes against which projects could be held. One participant suggested that any measures should be Specific, Measurable, Attainable, Relevant, and Timely (SMART).

3. Should it be a Digital Service Design Standard, or just a Service Design Standard?

Some participants questioned why the Standard in question is a digital standard – the principles are equally applicable to non-digital services.

4. Service design is a relatively new discipline, and it can be difficult to get people with the right skills and capabilities on projects

A number of participants felt that the service design function in their agencies was under-resourced, especially given the increasing workload as their agencies move ever more services online. One participant said that in their agency, the terms service design and business analysis were used interchangeably, and that digital transformation was “mainly IT-led with some service design slapped on at the end”.

5. People don’t know where to start

A number of participants said that they would like examples of best practice as well as guides for how to take the first steps toward designing better services. It was suggested that e-learning modules could be made available to help people upskill.

6. People don’t know where to go for support

Participants said that they don’t know where to go for support with issues related to the Standard, and that it would be great to have some centralised support available, perhaps from DIA, along with a community of practitioners for reference and support.

7. Priority will be low unless there are consequences for not complying

In the workshop sessions, there was a clear desire for a mandatory element to the Standard, and for that mandatory element to come with consequences. Discretionary items are usually the first thing to go when budgets are under pressure, or when faced with difficult choices. Participants commented: “what doesn’t get measured doesn’t get done”, “if it isn’t mandated, it won’t be funded”, “In an ideal world, it would be completely discretionary. But we don’t live in an ideal world”, and “This should be mandatory – I’m tired of waiting.”

An experienced participant added, “Even though web standards are mandatory, they haven’t been implemented because there are no consequences. One of the consequences could be getting support. The trick is getting agencies to recognise value, and finding out what help they need to successfully implement. We don’t want to give the Standard a bad name by forcing people to use it in a way that results in pain”.

8. Comparing ourselves to others

Several workshop participants suggested that it would be good to have maturity models so that agencies could rate themselves and work toward gradual improvement. Some were strongly in favour of league tables so that agencies that did not comply could be named and shamed. This was a divisive topic, as others were strongly opposed to league tables and encouraged a more supportive approach than naming and shaming.

9. Self-assessment questioned

Some participants compared self-assessment to letting kids mark their own homework. One way to mitigate that risk could be to allow self-assessment for projects meeting certain criteria, but centrally assess a random sample of those to validate the quality of self-assessment. Another participant suggested peer review of assessments.
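The random-sample idea could work roughly as follows. This Python sketch assumes a hypothetical list of self-assessed project identifiers and a hypothetical sampling rate; neither is specified in the workshop feedback, and `select_for_central_review` is an illustrative name only.

```python
import random


def select_for_central_review(project_ids, sample_rate, seed=None):
    """Randomly choose self-assessed projects for central validation.

    project_ids: identifiers of projects that were self-assessed (hypothetical).
    sample_rate: fraction of projects to review, e.g. 0.1 for 10% (hypothetical).
    seed: optional value that makes the draw reproducible for later audit.
    """
    if not 0 < sample_rate <= 1:
        raise ValueError("sample_rate must be greater than 0 and at most 1")
    projects = list(project_ids)
    if not projects:
        return []
    # Always review at least one project, even from a small pool.
    k = max(1, round(len(projects) * sample_rate))
    rng = random.Random(seed)
    # Sample without replacement, so no project is picked twice.
    return rng.sample(projects, k)


self_assessed = [f"project-{n}" for n in range(1, 41)]
chosen = select_for_central_review(self_assessed, sample_rate=0.1, seed=2019)
print(len(chosen), chosen)
```

With 40 hypothetical projects and a 10% rate, four projects are selected. Recording the seed would allow the draw to be reproduced later, which may help show that the sample was not chosen selectively.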

One participant suggested that, because of the large variation in context, capability, and practice between agencies, static measures were less valuable than helping agencies measure their own progress and improvement.

10. Assessment and reporting could be phased in over time

Some workshop participants said that if anything other than a completely discretionary assessment and reporting framework were put in place, agencies would need adequate time and preparation, and suggested that any non-discretionary regime be phased in over time.

11. Assessment and reporting burden must be sensible

One participant suggested that assessment and reporting for the Standard should fit in with other assessment and reporting requirements. Another requested that whatever framework is chosen should not be resource intensive, to “make it easy to do the right thing”.

12. Centralised functions add rigour

One participant suggested that centralised assessment and reporting would add rigour to the process, and give confidence that the same things were being assessed and reported.

13. Senior and middle managers need to buy in to the Standard

A number of participants said their senior and middle managers are not aware of the Standard or its importance, and that management buy-in would be needed to secure the resources required to implement it.

14. Standard compliance should be specified in Requests for Proposals (RFPs)

Several participants said that compliance with the Standard should be specified in RFPs, but that clearer measures would be required first.

Appendix B: Survey Results and Analysis

1. Participation

There were 30 respondents to the public sector surveys, from the following agencies.

- 9 from DIA.

- 6 from the New Zealand Transport Agency (NZTA).

- 2 each from Christchurch City Council and WorkSafe.

- 1 each from: Archives NZ, Better for Business, Inland Revenue, Land Information New Zealand (LINZ), the Ministry of Business, Innovation, and Employment (MBIE), the Ministry of Justice (MOJ), the Ministry for Primary Industries (MPI), the Ministry of Social Development (MSD), the National Library, Statistics NZ, and Te Papa.

Over half of the responses came from a combination of DIA and NZTA.

The non-public sector respondents came from six sources: five companies (Antipodes NZ, Forsyth, Open Data Model, Optimation, and Paperkite) and a freelancer. Two public sector people, from DIA and MPI, also responded to the non-public sector survey.

It’s worth noting that this is not a representative sample.

Analysis

There is no central register of public servants with an interest in service design, and it is unknown who in central government should be considered a stakeholder in the Standard. The methods used to contact potential stakeholders were therefore opportunistic. That said, the workshop participants and survey respondents were enthusiastically supportive of the Standard. Many were grateful to be able to get a better understanding of the Standard, and wanted to learn more.

2. Use and awareness of the Standard in agencies

When asked, “Is your agency/unit using the Digital Service Design Standard?”, four participants responded “yes”, 12 people responded “no”, and 14 were “not sure”.

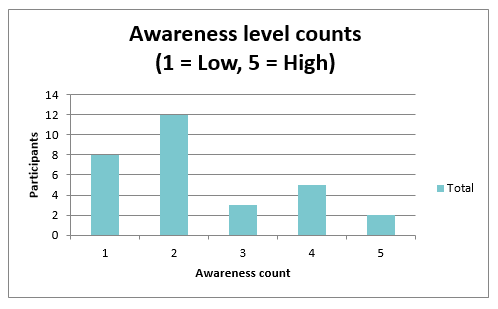

This bar graph shows the participants’ responses when asked about the awareness level of the Standard in their agencies. The scale was 1 to 5, where 1 is “no awareness” and 5 is “top of mind”.

Detailed description of graph

This bar graph shows participants’ level of awareness of the Standard. 8 participants chose 1 on the scale (no awareness), 12 chose 2 (low awareness), 3 chose 3 (somewhere in the middle), 5 chose 4 (a good understanding), and 2 chose 5, indicating that the Standard is top of mind.

Analysis

Even among the already interested and motivated stakeholders who attended the workshops, awareness of the Standard within their agencies is low, with a median score of 2 and an average of about 2.4. Awareness is likely significantly lower in agencies and units that did not send participants to these workshops.

There is clearly a low level of use of the Standard in the public sector: only 4 public sector participants said they knew the Standard was being used in their agency or unit, while the remaining 26 said that they were unsure, or that the Standard was not being used.
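The median and average awareness scores can be recomputed from the counts given in the graph description above. This short Python sketch assumes those counts make up the complete set of 30 public sector responses.

```python
from statistics import fmean, median

# Number of respondents giving each awareness score (1 = no awareness, 5 = top of mind),
# taken from the graph description above.
awareness_counts = {1: 8, 2: 12, 3: 3, 4: 5, 5: 2}


def expand(counts):
    """Turn {score: number of respondents} into one list entry per respondent."""
    return [score for score, n in sorted(counts.items()) for _ in range(n)]


scores = expand(awareness_counts)
print(len(scores))              # 30
print(median(scores))           # 2.0
print(round(fmean(scores), 2))  # 2.37
```

The scores sum to 71 across 30 respondents, giving a mean of roughly 2.37; the median is 2 because the 15th and 16th of the sorted responses are both 2.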

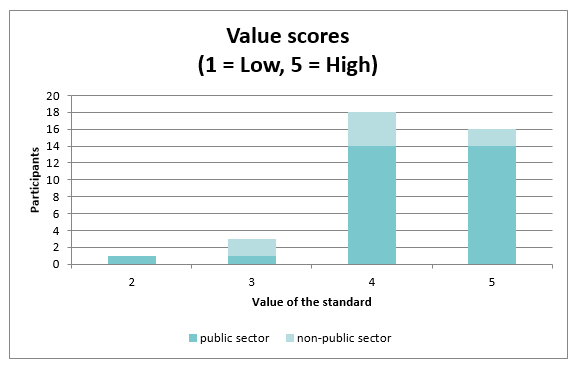

3. Value of the Standard

This bar graph shows the participants’ responses when asked how valuable they find the Standard. The scale was 1 to 5, where 1 is “no value at all” and 5 is “extremely valuable”.

Detailed description of graph

This bar graph shows how valuable the participants find the Standard. 1 participant from the public sector gave it a 2, a total of 3 participants gave it a 3 (1 public sector and 2 non-public sector), a total of 18 participants gave it a 4 (14 public sector and 4 non-public sector), and 16 participants gave it a 5 (14 public sector and 2 non-public sector).

Analysis

Participants clearly found the Standard very valuable, both in the public sector, and outside the public sector, with an average score of 4.3.
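The same calculation can be applied to the value scores, split by sector. This Python sketch uses the counts from the graph description above (30 public sector and 8 non-public sector responses); the per-sector averages are derived from those counts rather than reported separately in the survey results.

```python
from statistics import fmean

# Respondents giving each value score (1 = no value at all, 5 = extremely valuable),
# split by sector, from the graph description above.
value_counts = {
    "public sector": {2: 1, 3: 1, 4: 14, 5: 14},
    "non-public sector": {3: 2, 4: 4, 5: 2},
}


def expand(counts):
    """Turn {score: number of respondents} into one list entry per respondent."""
    return [score for score, n in sorted(counts.items()) for _ in range(n)]


by_sector = {sector: expand(counts) for sector, counts in value_counts.items()}
all_scores = [score for scores in by_sector.values() for score in scores]

for sector, scores in by_sector.items():
    print(f"{sector}: n={len(scores)}, average={fmean(scores):.2f}")
print(f"overall: n={len(all_scores)}, average={fmean(all_scores):.2f}")
```

This prints averages of 4.37 for the public sector, 4.00 for the non-public sector, and 4.29 overall, so both groups rated the Standard highly.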

4. Future plans

| Response | No. of participants |
|---|---|
| We plan on applying it more widely. | 7 |
| We'd like to explore applying it in new areas. | 5 |
| We plan on applying it to everything we do. | 5 |
| Needs further discussion. | 1 |
| I would, but not sure what the appetite in the agency would be. | 1 |
| "Standard" is a derivative from long-existing overseas standards and used as best practice. If elements of the standards are not used there is a reason for it. | 1 |
| If we're going to promote it, we should be applying it to everything we do. I'm not sure of our actual plans, however. | 1 |
| I would love to. Outside of my team, I am not sure if more than 3 people at my agency knows it exists. | 1 |
| Newcomer to the agency, would like to think we could apply the principles. | 1 |
| I'm not aware of how we'd incorporate it into our work. | 1 |
| We'd like to discuss with senior managers about applying it across the organisation. | 1 |
| I'm not sure what "we" plan on doing but I hope that "we" plan on applying it more widely. | 1 |
| Would love to but influence is needed at a higher level and in the business units. | 1 |
| Making sure all staff who work with digital content are aware of it will be a good start. | 1 |
| More communication from DIA on implementation expectations. | 1 |

Analysis

A significant majority of workshop participants would like to see the Standard used more widely. However, they noted that this would be difficult without greater awareness, particularly among senior managers, and support from DIA.

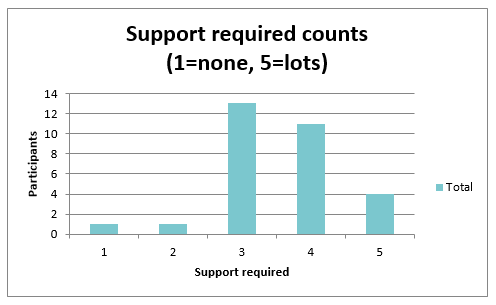

5. Additional support required

This bar graph shows the participants’ responses when asked “What level of additional support and resources do you need to meet your planned implementation of the Standard?”. The scale was 1 to 5 where 1 is “We don’t need any additional support and resources” and 5 is “We need lots of additional support and resources”.

Detailed description of graph

This bar graph shows how much support participants said they need to implement the Standard. 1 participant chose a 1 for no support required, 1 participant gave a 2 for little support required, 13 participants gave a 3 for some support required, 11 people gave a 4 for needing support and 4 participants gave a 5 for needing lots of additional support.

When asked for more detail on what kind of additional support they would like, respondents gave answers including the following.

- Joint working group to share ideas and lesson learned.

- Business case, some level of external checks/audit, good marketing, versions aimed at exec/business unit level.

- Mostly advice and the provable knowledge that other organisations are taking the initiative and are adopting this.

- More resources.

- The agency’s currently refactoring a lot of its business processes as is, but extra resource would be needed on baselining and, potentially, integrating the Standard across the agency.

- Resources that help people to understand why this is the Standard — the department do a good job of making sure it discusses why we are inclusive of te reo Māori and other cultures but I’ve struggled a lot with discussions around literacy and the use of plain English and accessibility.

- We will need to continue building internal capability, and integrate more closely with procurement to ensure external suppliers of design services use the Standard.

- webinars/videos et al.

- Not support or resources, but expectation of implementation and any upcoming assessment/measuring. A simple assessment framework as a starting point.

- A mandate; endorsement from leaders; a way to measure it; educating agencies through workshops and other collateral.

Analysis

Participants would clearly like significantly more support than there is currently to help them implement the Standard. This comment sums it up:

“[We need a] mandate; endorsement from leaders; a way to measure it; educating agencies through workshops and other collateral.”

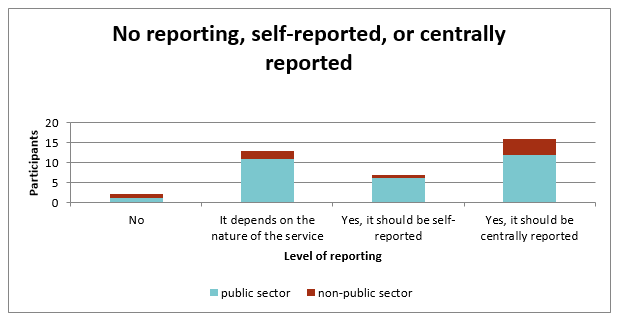

6. Reporting

This bar graph shows the public sector and non-public sector participants’ responses when asked “Do you think government agencies should report on their use of the Standard?”.

Detailed description of graph

This bar graph explains the level of reporting on the Standard that participants believe there should be. 2 participants (one public sector and one non-public sector) said that no reporting is required, 13 participants (11 public sector and 2 non-public sector) said it depends on the nature of the service, 7 participants (6 public sector and 1 non-public sector) said yes, it should be self-reported and 16 participants (12 public sector and 4 non-public sector) said yes, it should be centrally reported.

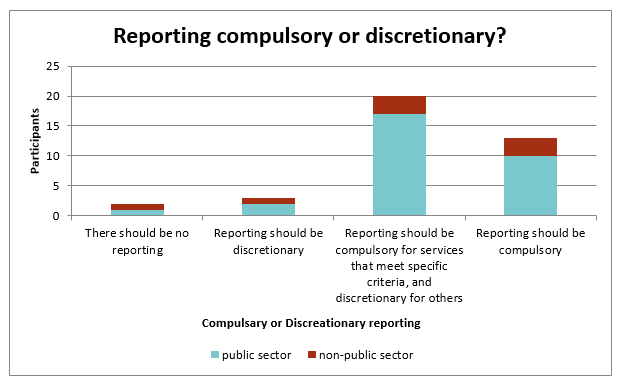

This bar graph shows the participants’ responses when asked “Should reporting be compulsory or discretionary?”.

Detailed description of graph

This bar graph shows whether participants believe reporting should be compulsory, discretionary, or somewhere in between depending on requirements. 2 participants (one public sector and one non-public sector) said there should be no reporting, 3 participants (two public sector and one non-public sector) said reporting should be discretionary, 20 participants (17 public sector and 3 non-public sector) said reporting should be compulsory for services that meet specific criteria and discretionary for others, and 13 participants (10 public sector and 3 non-public sector) said reporting should be compulsory.

When asked what should be reported, some of the participants replied with the comments that follow.

- It depends on the service, but at least the following.

  - What Standard(s) was to be applied to a service and why.

  - How the Standard(s) are to be applied.

  - Expected outcome.

  - Actual outcome.

  - Lessons learned: what went well and should be repeated, what went wrong and how to resolve it better next time.

- Performance against the principles, with qualitative examples of benefits.

- All online services.

- Web standards assessment, though whether self-reported or mandatory this should be refined to encourage completion rates.

- Results from assessment.

- It should be self-reported, but centrally assessed. Agencies should report on how they meet the principles. A checklist with evidence referenced for central review (as required).

- That we're monitoring service use and uptakes and making continuous improvements.

- How and what is implemented.

- The projects that we used the Standard on, and how we have attempted to address each principal.

- I liked the idea of the maturity model — not everyone will be able to implement and meet these standards immediately, it’s a big change in the way a lot of content and services are produced and delivered. Have a step change model gives agencies goals to work towards. I also think that awards or some form of recognition of achievement is a great driver for implementation

- Level of openness/transparency (Principle 7 and 12) and the level of accessibility/ethics considered (Principle 4).

- While use of the Standard depends on the nature of the service, central reporting will allow a comprehensive exploration of areas where the Standard might be employed, and create a basis for comparative analysis, whereas self-reporting would result in inconsistent interpretation/application of the Standard. Comparative reporting of “current state” should be primarily for internal purposes to identify and drive areas for improvement, i.e. NOT published as league-tables, whereas year-on-year (or reporting period - on reporting period) changes should be made public to serve as inspiration and incentive to improve.

Analysis

Overall, participants believed that there should be at least a compulsory element to reporting, and that whether the reporting is done by the agencies themselves or centrally should depend on the nature of the service being reported.

7. Assessment

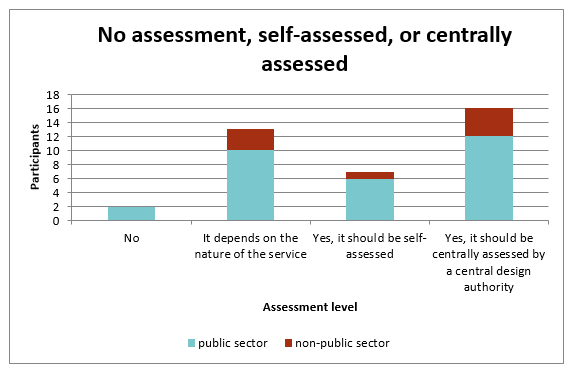

This bar graph shows the public sector and non-public sector participants’ responses when asked “Do you think government agencies should be assessed on their use of the Standard?”.

Detailed description of graph

This bar graph shows whether participants believe there should be any assessment on the use of the Standard, and the level of assessment. 2 participants (both from the public sector) said no assessment required, 13 participants (10 public sector and 3 non-public sector) said it depends on the nature of the service, 7 participants (6 public sector and 1 non-public sector) said yes it should be self-assessed, and 16 participants (12 public sector and 4 non-public sector) said yes it should be centrally assessed by a central design authority.

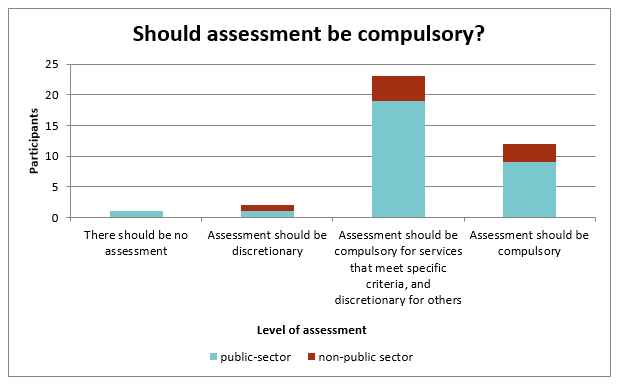

This bar graph shows the public sector and non-public sector participants’ responses when asked “Should assessment be compulsory across government?”.

Detailed description of graph

This bar graph shows whether participants believe that an assessment should be compulsory, or if no assessment is required. 1 participant (public sector) said that there should be no assessment, 2 participants (1 public sector and one non-public sector) said assessment should be discretionary, 23 participants (19 public sector and 4 non-public sector) said assessments should be compulsory for services that meet specific criteria and discretionary for others, and 12 participants (9 public sector and 3 non-public sector) said assessments should be compulsory.

When asked what should be assessed, some participants gave the following comments.

- How we used it and could utilise [sic] it better.

- As above — compliance in specified services.

- Critical systems that are used across many organisations should be assessed, though some will be bespoke or plain (i.e. non-critical) and assessing them will be of limited or no value.

- If it is critical and common, it should be assessed.

- Publicly-popular services like apps and websites should be assessed too, as they are single-points of entry for the public and that is who government should be supporting.

- Accessibility and usability.

- Agencies should be assessed on whether/how they meet the principles.

- Set out measurable objectives based on the principles.

- An overview of factors that impact the people using the service. It’s a big question, though, since the variety of services to be covered would be vast. It should also consider affordances with related physical services and take a holistic view of how they overlap. For example, in our Library, we offer a digital service for requesting the delivery of physical materials. That digital experience lives in the website, but the whole of the experience includes finding, requesting, receiving, using, and returning those materials.

- The entire standard. The reason it should be centrally assessed is to ensure the assessment is rigorous and standardised. Self-reporting is often problematic in that regard.

- Methods and rigor around service/product creation e.g. evidence of user engagement, co-design, integration/consideration of te ao Māori, consideration of non-digital. Other data around foundations (accessibility, privacy, security) should already be available.

- How well our guidance/educational resources adhere to the Standard (if at all), particularly around the use of te reo and accessibility.

Analysis

As with reporting, participants felt that there should be a compulsory element to assessment, and that whether assessment is done by agencies themselves or centrally should depend on the nature of the service.

Appendix C: Feedback on specific principles in the Standard

During the workshops, each of the individual principles was discussed. Participants offered the following feedback on the principles.

Some principles could be refactored

A number of principles cover similar ground and could be combined, for example Principle 7: Work in the open; Principle 8: Collaborate widely and enable reuse by others; and Principle 12: Be transparent and accountable to the public.

Principle 4: Be inclusive, and provide ethical and equitable services

Many participants felt that there was a drive to create “flashy” new services, and that accessibility, especially for people with sensory disabilities, is frequently deprioritised despite the Web Accessibility Standard being mandatory. The reason cited was low awareness of the Web Accessibility Standard, likely due to the lack of consequences for non-compliance.

One participant said that little regard is given to people who aren’t fully digital, and that agencies “…need more justification to spend time on this as it doesn’t bring benefits to the agency, eg lower cost to serve.”

Another participant noted that literacy levels should be addressed as part of accessibility.

And yet another suggested that end-users from target audiences should be involved in service design “right the way through”.

Principle 5: Design and resource for the full lifetime of the service

Participants in every workshop commented on Principle 5, pointing out that it is very rarely achieved. One said, “nothing is ever funded or developed beyond the MVP stage – all we are left with is a pile of MVPs”.

One participant suggested that “we should focus on a mindset of continuous improvement rather than delivery and maintenance. Users change and ongoing needs change”. This could be incorporated into a lifetime funding perspective.

Another participant said, “Typically you need to whittle budget down to exclude things like maintenance, fixing bugs, making it pretty …. We have deep systemic issues with the way we fund work”.

Principle 6: Create and empower an interdisciplinary team

Some participants came from very small units; some were the only person managing the online presence for their area. For them, any team at all would be an improvement. Establishing an active inter-agency service design community could be a useful way for them to obtain support and other perspectives.

A few participants in separate sessions suggested that people responsible for the ultimate business-as-usual delivery of the service should be involved in the design of new services, emulating a “devops” approach to service design.

Principles 7 and 8: Work in the open, and collaborate widely, reuse, and enable reuse by others

A number of participants noted that it’s not cheap to collaborate well or develop components so that they are usable outside the immediate project context, but that the investment was nearly always worth it. In the words of one participant, “This needs to be driven from the top.”

Another participant said, “This should include sharing your learnings widely. What you learn is as important as what you build.”

And another suggested that there be a central repository of service design tools, templates, and other artefacts.

Principle 9: Design for our unique constitutional and cultural environment

Some participants felt that Te Tiriti o Waitangi should be mentioned in the title of this principle. Others added that building bilingual sites is hard, and that projects are not typically funded for it. One participant commented that “We’re missing models for authentic engagement. We don’t want to be cultural tourists.”

One participant pointed out that Māori data sovereignty is a difficult issue which should be mentioned in this principle.

Principle 10: Use digital technologies to enhance service delivery

This is the only principle that specifically mentions digital. Many participants felt that the main driver for adopting digital technologies in government was to reduce costs and streamline back-end processes, and that user experience was frequently considered only at the end of a project. Greater awareness of the Standard, and a mandate for it, could help to ensure that digital technologies are used to enhance the service experience for citizens.

Principle 12: Be transparent and accountable to the public

One participant remarked that “Comms departments make this really difficult. Comms should be a support function, but often ends up being a control function.”
