When Govt.nz launched in July this year we’d already completed several rounds of user research. The design of the site and the layout of the homepage had changed a lot since we launched the first beta version the previous year.

How users are getting to our homepage

After several months, we started to see some interesting patterns in our website analytics. A lot of people were searching Google using terms like:

- “new zealand government” (and various alternative versions)

- “nz government website”

- “nz government departments”

- “govt.nz” (and various alternative versions like “govt.co.nz”).

Most users start their visit to Govt.nz elsewhere on the site, but many will view the homepage as part of their visit. In a typical 2-week period the homepage gets 20,000 page views in total, and more than 5,000 visits start on the homepage. You can see other stats for Govt.nz on our analytics page.

For users that do start on the homepage:

- 85% of visitors are counted as “new”, but this isn’t an accurate measure: users can have more than 1 device, use different browsers, or clear tracking cookies off their machines.

- On average, users looked at 3 or 4 pages of content.

- 6 out of 10 visits led to users exploring the site, but 4 in 10 resulted in a “bounce” off the homepage. A bounce means the user looked only at that page and then left the site without any further interaction.
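To make the bounce figure concrete, here is a minimal sketch of how a homepage bounce rate could be worked out from raw visit records. The `Session` record and the sample data are made up for illustration, and counting a bounce as "exactly 1 page viewed" is a simplification of how an analytics tool defines it.

```python
from dataclasses import dataclass


@dataclass
class Session:
    landing_page: str  # first page viewed in the visit (hypothetical field)
    pageviews: int     # total pages viewed in the visit (hypothetical field)


def bounce_rate(sessions, landing_page="/"):
    """Share of sessions starting on landing_page that viewed only that page."""
    started_here = [s for s in sessions if s.landing_page == landing_page]
    if not started_here:
        return 0.0
    # Simplifying assumption: a bounce is a visit with exactly one page view.
    bounces = sum(1 for s in started_here if s.pageviews == 1)
    return bounces / len(started_here)


# Made-up sample: 10 visits start on the homepage, 4 of them view nothing else.
# The visit that starts on another page is ignored by the calculation.
sample = [Session("/", 1)] * 4 + [Session("/", 3)] * 6 + [Session("/passports", 1)]
print(f"{bounce_rate(sample):.0%}")  # prints 40%
```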

Are we meeting user needs?

From this data it looks like there’s a group of users we’re not helping. Are they looking for announcements published by the Government of the day (eg beehive.govt.nz content)? Are they looking for information about government services and not seeing what they want?

Although we’ve had a wide range of feedback on the site design, we wanted to get a better understanding of users’ needs when visiting the site. We decided to run an A/B test or, to be specific, an A/B/C/D/E test. We left the original homepage in place, but randomly showed users 4 other homepage variations with different blocks of homepage content and images. By mixing up different combinations of a few changes we could test whether the position of content, or the content itself, was a factor in reducing the homepage bounce rate.

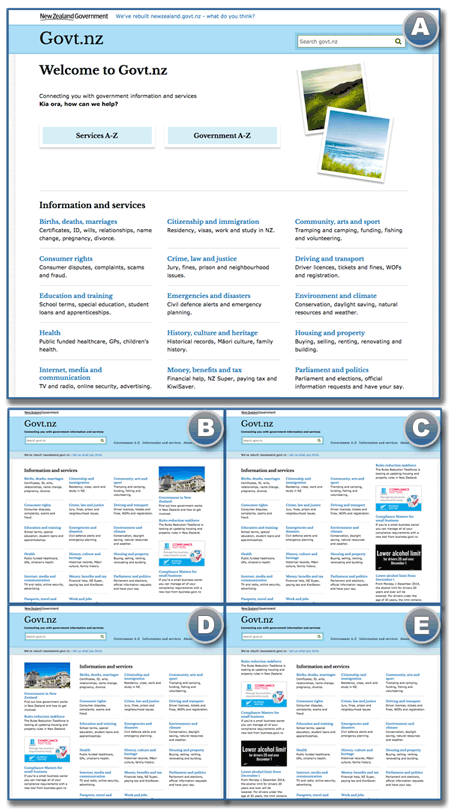

During A/B testing of the Govt.nz homepage we used the following versions:

- Version A: the original homepage with a decorative image on the top right of the page, and links to the main categories on the site in 3 columns for desktop users

- Version B: promo stories on the right side of the page, and a promo story that featured an image of the Beehive (the Executive Wing of the New Zealand Parliament Buildings)

- Version C: similar to Version B, but the promo story that featured the Beehive image was put lower on the page

- Version D: promo stories on the left side of the page and, like Version B, the Beehive promo story featured at the top of the list

- Version E: similar to Version C, but with promo stories on the left side of the page and in a different order

Version B was the most successful variation of the 4 designs tested.

If you’ve visited the Govt.nz homepage in the last few weeks you may have seen one of these variations. A big thanks from us because you’ve helped us get a better understanding of how people interact with our content. You’re also not alone: the experiment ran more than 16,500 times.
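As a rough illustration of splitting visitors across the five versions, here is a small sketch. It is a generic hash-based split and not a description of how the Google Analytics experiment tool allocates traffic. The `assign_version` function and the idea of a `visitor_id` string (for example a cookie value) are assumptions made for the example.

```python
import hashlib

VERSIONS = ["A", "B", "C", "D", "E"]


def assign_version(visitor_id: str) -> str:
    """Map a visitor ID to one of the five versions, the same one every time."""
    digest = hashlib.sha256(visitor_id.encode("utf-8")).digest()
    # Use the first 8 bytes of the hash as a number and spread it over 5 buckets.
    bucket = int.from_bytes(digest[:8], "big") % len(VERSIONS)
    return VERSIONS[bucket]


# Count how 5,000 made-up visitor IDs are spread across the versions.
counts = {version: 0 for version in VERSIONS}
for i in range(5000):
    counts[assign_version(f"visitor-{i}")] += 1
print(counts)
```

Hashing the ID instead of picking a version at random on every page load means a returning visitor keeps seeing the same homepage, as long as their ID stays the same.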

The tools

Govt.nz uses Google Analytics (GA) to track what users do on the site. GA includes content experiment tools that are pretty easy to set up:

- Decide on what design variation you want to test and what your measure of success will be – we wanted to find out which version would give us the biggest improvement in the homepage bounce rate.

- Create your pages. Each variation needs its own unique URL.

- Load all this information into the content experiment tool. Google will give you an “experiment key”.

- Get the tracking code installed on the original version of the page you want to do the experiment on.

- Activate the experiment in Google Analytics — you should start to see early results within 24 hours.

We use a common analytics tracking code across all our environments. Inside Google Analytics we use a single account, a single property and then different profiles (views) to filter out traffic for each environment into separate reports. This meant we could test both the new versions of the pages, and the updated tracking code before putting it on to the live site.

Interpreting the data

You need to make sure you let the experiment run for long enough to see if there are any patterns.

Collect data for a few weeks

I’m glad we ran the test over 4 weeks, because during the test:

- the results for each design changed on a daily basis

- the early results looked promising, but over time the differences between the variations became smaller and smaller

- we could see if there were any differences in user behaviour between weekdays and weekends, or between business hours and evenings, when people were sitting on the couch at home after dinner.
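One way to check whether an early difference in bounce rate is more than noise is a two-proportion z-test. The sketch below is a generic textbook check, not how the Google Analytics experiment tool calculates its own results, and the visit counts in the example are made up.

```python
import math


def two_proportion_z_test(bounces_a, visits_a, bounces_b, visits_b):
    """Return (z, two-sided p-value) for the difference between two bounce rates."""
    rate_a = bounces_a / visits_a
    rate_b = bounces_b / visits_b
    # Pooled rate under the assumption that both versions behave the same.
    pooled = (bounces_a + bounces_b) / (visits_a + visits_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    if std_err == 0:
        return 0.0, 1.0  # every visit bounced, or none did: no difference to test
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up early result: 40 bounces in 100 visits versus 30 bounces in 100 visits.
z, p = two_proportion_z_test(40, 100, 30, 100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A 10-point gap looks large, but with only 100 visits per version the p-value stays above the usual 0.05 threshold. That is one reason to keep collecting data before picking a winner.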

Data, data and MORE DATA!

Getting the basic results is straightforward. The experiment tool has a built-in report that gives you all the numbers for things like bounce rate, length of visit, and it even reports against any site goals you might have set up.

Besides the bounce rate, I wanted to see which variation of the homepage worked better using these metrics:

- average length of visit (session duration)

- goal — 5 minutes or more on site

- average number of pages viewed in a visit

- goal — user views 3 or more pages during their visit

- goal — how many people find content and a link to an agency website and then follow that link. We call that “agency referral” (and it’s one of the main reasons why Govt.nz exists).
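The metrics and goals listed above can be sketched as a small per-version summary. The `Visit` record, its field names and the sample visits are hypothetical. Only the thresholds (5 minutes, 3 pages) come from the goals above.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Visit:
    version: str                # homepage version shown: "A" to "E"
    duration_seconds: float     # length of the visit
    pages_viewed: int           # pages viewed during the visit
    followed_agency_link: bool  # True if the user clicked through to an agency site


def summarise(visits, version):
    """Average metrics and goal completion rates for one homepage version."""
    rows = [v for v in visits if v.version == version]
    if not rows:
        return None
    n = len(rows)
    return {
        "avg_duration_seconds": mean(v.duration_seconds for v in rows),
        "avg_pages": mean(v.pages_viewed for v in rows),
        "goal_5_minutes": sum(v.duration_seconds >= 300 for v in rows) / n,
        "goal_3_pages": sum(v.pages_viewed >= 3 for v in rows) / n,
        "goal_agency_referral": sum(v.followed_agency_link for v in rows) / n,
    }


# Made-up visits, for illustration only.
visits = [
    Visit("B", 360, 4, True),
    Visit("B", 60, 1, False),
    Visit("A", 120, 2, False),
]
print(summarise(visits, "B"))
```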

The experiment tool also lets you apply any custom segments that you use on other Google Analytics reports you might have set up. Govt.nz has a number of custom segments we use for our internal reporting. A few of the main segments I thought would be interesting to apply to the homepage experiment were:

- Desktop, tablet and mobile use

- Organic search (ie Google and other internet search engines), referral traffic, and direct traffic

- NZ users vs overseas.

Patterns, trends and bringing order to the universe

As an information architect I found myself swimming in data.

I mapped all the numbers out in a spreadsheet. Then I used a simple ranking to show up patterns across the 5 versions of the homepage for each metric and segment we were exploring. Looking at the ranking made it easier to see patterns, particularly anything in the results that stood out as unexpected or unusual.

Adding a colour coding to each rank made the comparison between versions even easier. I chose a range of green (meaning a high ranking), through to red (meaning a low ranking). Just because one of the homepage versions ranked "in the red" didn't mean the result for that version overall was poor, just that it was lower than the others. You can use any colours you like, provided you apply them consistently.
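The ranking and colour coding can be done in a spreadsheet, but here is a sketch of the same idea in code. The pages-per-session figures are made up, the three colour bands (with "amber" in the middle) are an assumption, and ties simply get consecutive ranks.

```python
def rank_cells(values, higher_is_better=True):
    """Rank every (segment, version) cell from 1 (best) to N (worst)."""
    cells = [
        (segment, version, value)
        for segment, row in values.items()
        for version, value in row.items()
    ]
    cells.sort(key=lambda cell: cell[2], reverse=higher_is_better)
    return {
        (segment, version): rank
        for rank, (segment, version, _) in enumerate(cells, start=1)
    }


def rank_colour(rank, total):
    """Map a rank to a coarse colour band: top third green, bottom third red."""
    third = total / 3
    if rank <= third:
        return "green"
    if rank <= 2 * third:
        return "amber"
    return "red"


# Made-up pages-per-session figures for 2 segments and 2 versions.
pages_per_session = {
    "Desktop": {"A": 3.9, "B": 4.1},
    "Mobile": {"A": 2.2, "B": 2.4},
}
ranks = rank_cells(pages_per_session)
for (segment, version), rank in sorted(ranks.items(), key=lambda item: item[1]):
    print(segment, version, rank, rank_colour(rank, len(ranks)))
```

For a metric where lower is better, such as bounce rate, pass `higher_is_better=False` so that the lowest value gets rank 1.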

An example of the data analysed

| User Segment | Version A | Version B | Version C | Version D | Version E |
|---|---|---|---|---|---|
| All users (average) | 10 | 8 | 9 | 6 | 14 |
| Desktop | 3 | 2 | 4 | 5 | 7 |
| Tablet | 16 | 15 | 11 | 1 | 13 |
| Mobile | 18 | 17 | 19 | 12 | 20 |

| User Segment | Version A | Version B | Version C | Version D | Version E |
|---|---|---|---|---|---|
| Organic search | 2 | 3 | 4 | 1 | 7 |
| Referral traffic | 11 | 5 | 10 | 12 | 6 |
| Direct traffic | 14 | 15 | 13 | 9 | 8 |

| User Segment | Version A | Version B | Version C | Version D | Version E |
|---|---|---|---|---|---|
| NZ users | 9 | 6 | 4 | 7 | 8 |
| Overseas users | 5 | 2 | 3 | 1 | 10 |

Analysing the "Pages per session" metric

The A/B test results showed that:

- none of the options performed well for users with mobile devices

- versions C, D, and E performed slightly better for tablet users

- for users on desktop-style machines, all of the versions performed about the same

- for users that came to the site from search engines like Google (as opposed to referrals or direct traffic), versions B, C, and D performed in a similar way to the original homepage (Version A), and these 4 versions performed the best overall compared to the other results we explored for this metric

- all variations, including the current home page, performed more or less the same for both New Zealanders and visitors from overseas — if anything, a few of the variations worked better for overseas visitors.

Other observations

Here are some of the other things I saw in the complete set of data we collected.

- Overseas users spend a lot longer exploring the site — is this due to not being familiar with government in NZ or not having English as a first language?

- Variation D, which had promo stories on the left, didn’t work well on mobile devices — probably because the promo stories appeared above the main links on the homepage and people had to scroll too much to find anything they thought was useful

- Users visiting the site on a tablet were more likely to click links on the main part of the homepage, when the promo stories were on the left side of the page. I think this is due to the majority of people being right-handed, and turning their tablets to look at the site in landscape view — that’s something we need more research on!

- Variations B and C, which had the promo stories on the right, led more users to find and click links to agency sites; both of these versions worked better for mobile users.

What next?

I’ve presented the high-level results to the Govt.nz team and now we’re going to decide what changes we’ll make to the site. Product Manager Victoria Wray will talk about that in a coming post.

A, B, C is one thing, but what about U?

What’s your experience with A/B testing? Did you do an experiment and discover results that were both interesting and unexpected? Is there an experiment you’d like Govt.nz to do in the future? You’re welcome to leave your thoughts below.